Built for AI: ProLabs Transceivers & Cabling

Artificial Intelligence (AI) and Machine Learning (ML) are driving a new era of high-performance computing. These workloads demand infrastructure that delivers ultra-low latency, high bandwidth, and the ability to scale.

ProLabs supports this evolution with a complete portfolio of NVIDIA/Mellanox-compatible transceivers and cabling solutions. Designed to meet the demands of AI-driven environments, our optics make it easy to upgrade to 400G and 800G networks while maintaining platform compatibility, thermal performance, and data integrity.

The Impact of AI on Data Center Networks

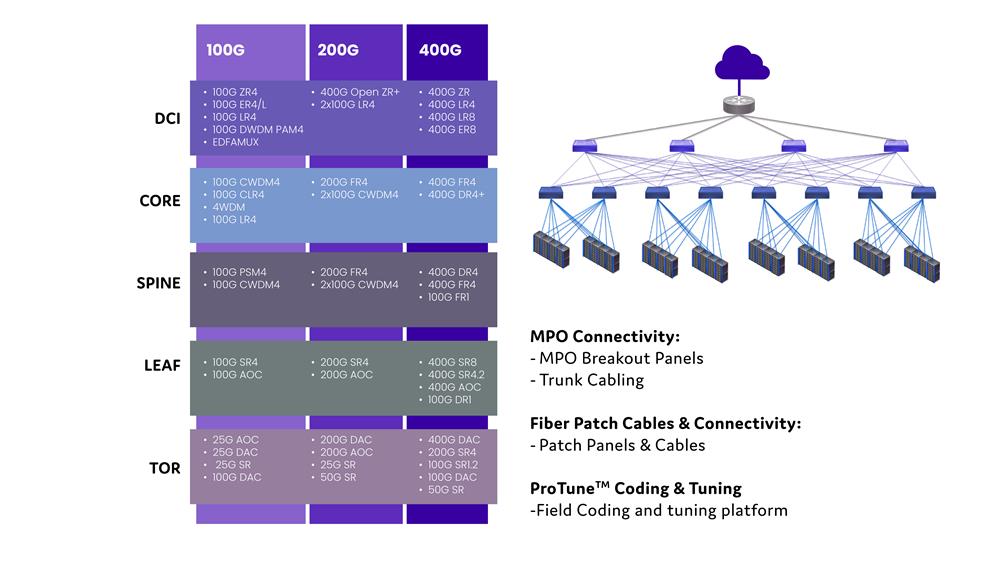

A Comprehensive Infrastructure Overview

AI platforms such as ChatGPT, DALL·E, and Deepbrain are driving exponential growth in compute demand. These applications require massive throughput and tight latency budgets that push traditional networks to their limits.

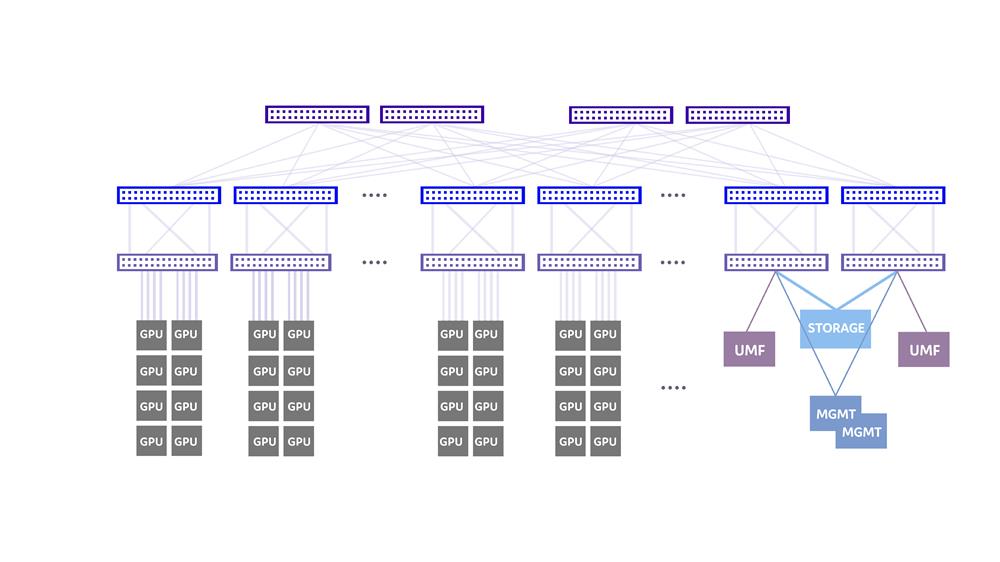

In an AI cluster, GPUs, CPUs, and storage behave like components on a motherboard, constantly exchanging data at high speed. This creates a need for contention-free, high-bandwidth architectures that can keep pace with AI and ML workloads.

To meet these needs, operators must adopt transceivers that can handle intensive traffic volumes and deliver reliable performance at scale. ProLabs' high-speed optical solutions are optimized for 400G and 800G environments and are purpose-built to support the next generation of AI-ready data centers.

AI/ML Cluster Data Center Design

Advanced 200G, 400G, 800G Infrastructure

Reference Architecture for AI/ML Clusters

Traditional top-of-rack (ToR) setups are evolving. In modern AI networks, nodes connect directly to leaf switches, reducing latency and simplifying topology. This direct architecture is essential for high-speed AI applications that rely on real-time access to compute and storage.

ProLabs transceivers enable these designs with low-latency, high-density connectivity across the cluster, optimized for both training and inference workloads.
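A contention-free design can be checked with simple arithmetic. A leaf switch is non-blocking when its uplink bandwidth toward the spine at least matches the bandwidth of its node-facing ports. The following sketch computes that oversubscription ratio. The function name and the port counts are hypothetical examples and are not a ProLabs or NVIDIA reference design.

```python
from fractions import Fraction


def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> Fraction:
    """Ratio of node-facing to spine-facing bandwidth on one leaf switch.

    A result of 1 means non-blocking: every node can send at line rate at once.
    A result above 1 means the uplinks can become a point of contention.
    """
    downlink = down_ports * down_gbps  # total Gb/s toward GPU/storage nodes
    uplink = up_ports * up_gbps        # total Gb/s toward the spine layer
    return Fraction(downlink, uplink)


# Hypothetical leaf: 32 x 400G ports to nodes, 16 x 800G ports to spines.
ratio = oversubscription(32, 400, 16, 800)
print(f"{ratio.numerator}:{ratio.denominator}")  # 1:1, non-blocking

# Hypothetical leaf: 48 x 400G down, 8 x 800G up.
ratio = oversubscription(48, 400, 8, 800)
print(f"{ratio.numerator}:{ratio.denominator}")  # 3:1, oversubscribed
```

Using `Fraction` keeps the ratio exact, so a design that is meant to be 1:1 will not show up as 0.999 because of floating-point rounding.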

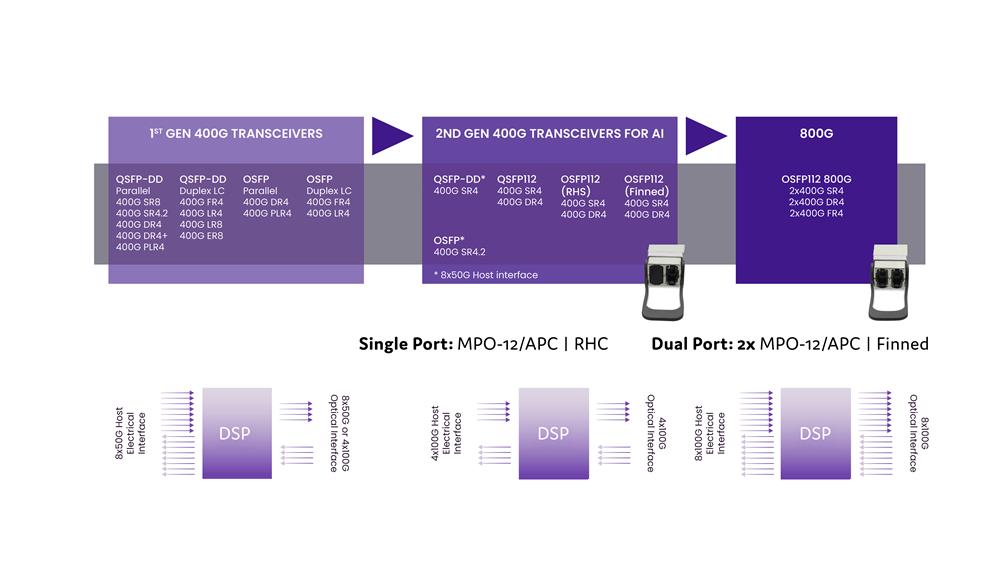

Transceivers from 400G to 800G

Scalable Optical Solutions for AI Environments

Platform Compatibility You Can Count On

Successful AI/ML deployments require transceivers that integrate cleanly across diverse platforms. Not all switches or network adapters support the same features, so careful component selection is critical.

Some adapters may not support QSFP56 SR2 (100G PAM4) or breakout modes. Others may support only Ethernet or only InfiniBand. That’s why ProLabs validates every product for MSA compliance and platform interoperability, ensuring your deployment is seamless and future-ready.
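The selection criteria above can be expressed as a pre-deployment checklist. The following sketch compares a port's capabilities against how a module will be used and reports each mismatch. `AdapterCaps`, `ModulePlan`, and `compatibility_issues` are hypothetical names used only for illustration. Real capability data would come from the switch or adapter vendor's documentation.

```python
from dataclasses import dataclass


@dataclass
class AdapterCaps:
    """Hypothetical summary of what a switch port or network adapter supports."""
    protocols: set[str]             # e.g. {"Ethernet"} or {"Ethernet", "InfiniBand"}
    supports_sr2: bool = False      # QSFP56 SR2 (100G PAM4)
    supports_breakout: bool = False


@dataclass
class ModulePlan:
    """Hypothetical description of how a transceiver will be deployed."""
    protocol: str
    is_sr2: bool = False
    needs_breakout: bool = False


def compatibility_issues(adapter: AdapterCaps, plan: ModulePlan) -> list[str]:
    """Return a list of reasons the plan will not work; empty means no known conflict."""
    issues = []
    if plan.protocol not in adapter.protocols:
        issues.append(f"adapter does not run {plan.protocol}")
    if plan.is_sr2 and not adapter.supports_sr2:
        issues.append("adapter does not support QSFP56 SR2 (100G PAM4)")
    if plan.needs_breakout and not adapter.supports_breakout:
        issues.append("adapter does not support breakout mode")
    return issues


eth_only = AdapterCaps(protocols={"Ethernet"})
print(compatibility_issues(eth_only, ModulePlan(protocol="InfiniBand", needs_breakout=True)))
# ['adapter does not run InfiniBand', 'adapter does not support breakout mode']
```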

We also support the OSFP-RHS (riding heat sink) form factor, a flat-top OSFP variant designed for better heat dissipation in compact environments. Because it supports 400G links built on 112G-class electrical lanes, it fits the low-profile network adapters installed in server PCIe slots while meeting the thermal and performance needs of dense AI clusters.

Designed for Versatile Applications

- QSFP56-DD Ports
  - Support 400G QSFP-DD, 200G QSFP28-DD, 200G QSFP56, and 100G QSFP28.

- QSFP56 Ports
  - Support 200G QSFP56 and 100G QSFP28.

- QSFP112 Ports
  - Support 400G QSFP112, 200G QSFP56, and 100G QSFP28.
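The port list above works as a lookup table. The following sketch encodes that table so a bill of materials can be checked in both directions: whether a module fits a port, and which ports accept a given module. The function names are hypothetical. The table contains only the combinations listed here, so a `False` result means "not listed" rather than "confirmed unsupported."

```python
# Module types accepted by each port type, taken from the list above.
PORT_SUPPORT: dict[str, tuple[str, ...]] = {
    "QSFP56-DD": ("400G QSFP-DD", "200G QSFP28-DD", "200G QSFP56", "100G QSFP28"),
    "QSFP56": ("200G QSFP56", "100G QSFP28"),
    "QSFP112": ("400G QSFP112", "200G QSFP56", "100G QSFP28"),
}


def fits(port: str, module: str) -> bool:
    """True if `module` is listed as supported in `port`."""
    return module in PORT_SUPPORT.get(port, ())


def ports_for(module: str) -> list[str]:
    """Every listed port type that accepts `module`, in table order."""
    return [port for port, modules in PORT_SUPPORT.items() if module in modules]


print(fits("QSFP56", "400G QSFP112"))  # False
print(ports_for("200G QSFP56"))        # ['QSFP56-DD', 'QSFP56', 'QSFP112']
```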

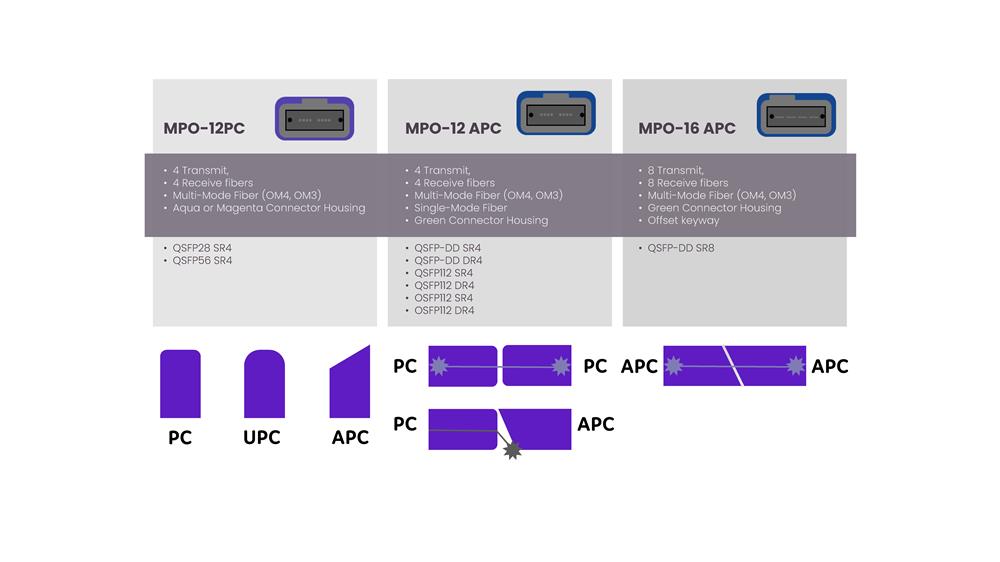

MPO Cabling Considerations

Patch Cable Guidelines for 400G and Legacy Transceivers

Transceiver performance depends on using the correct cabling standard.

- 400G SR4 optics require multimode MPO cables with APC (angled physical contact) end faces, which reduce signal reflection and improve performance at high data rates.

- Legacy SR4 optics use MPO/PC (physical contact) cabling, which suits lower-speed transmission while maintaining solid link integrity.
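APC and PC end faces are not interchangeable. An angled connector mated to a flat one leaves an air gap that degrades the link, so checking polish type before installation is worthwhile. The following sketch encodes the two rules above and flags mismatched patch cables. The optic labels (`"400G SR4"`, `"legacy SR4"`) and the `check_patch_cable` function are simplified, hypothetical names for illustration only.

```python
# (connector polish, fiber type) expected by each optic family, per the guidelines above.
CABLE_RULES: dict[str, tuple[str, str]] = {
    "400G SR4": ("APC", "multimode"),
    "legacy SR4": ("PC", "multimode"),
}


def check_patch_cable(optic: str, polish: str, fiber: str) -> list[str]:
    """Return cabling problems for this optic; an empty list means the cable matches."""
    rule = CABLE_RULES.get(optic)
    if rule is None:
        return [f"no cabling rule recorded for {optic!r}"]
    want_polish, want_fiber = rule
    problems = []
    if polish != want_polish:
        problems.append(f"{optic} needs MPO/{want_polish}, got MPO/{polish}")
    if fiber != want_fiber:
        problems.append(f"{optic} needs {want_fiber} fiber, got {fiber}")
    return problems


print(check_patch_cable("400G SR4", "PC", "multimode"))
# ['400G SR4 needs MPO/APC, got MPO/PC']
```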

ProLabs offers a full range of patch cables engineered for optimal performance in both legacy and high-speed deployments.